-

VMware Cloud Providers Feature Fridays

My colleague Guy Bartram has led over 30 VMware Cloud Provider-focused sessions, hosting a different expert on each. I was previously a guest on the Bitnami and App Launchpad Feature Friday. I highly recommend that every VMware Cloud Provider take a look at these sessions and subscribe to the VMware Feature…

-

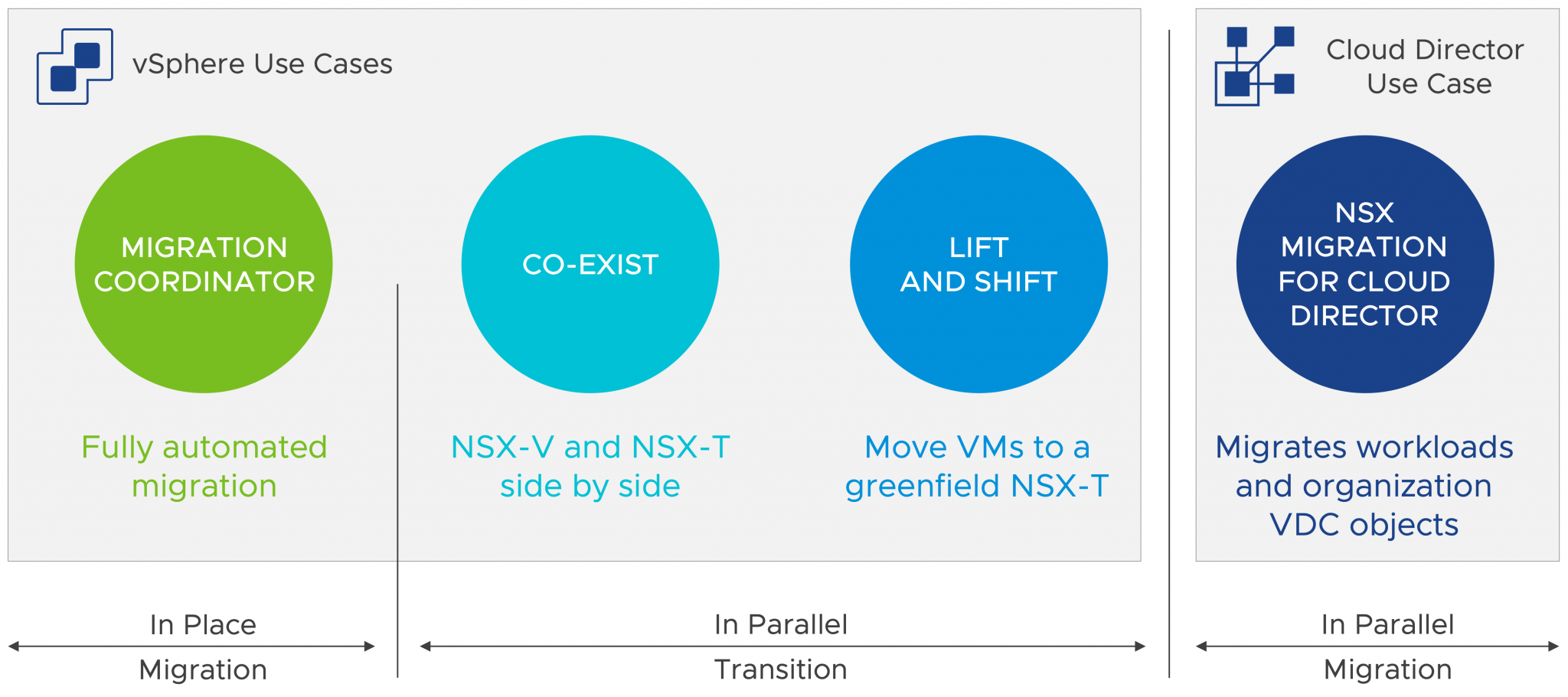

What’s new in NSX Migration for VMware Cloud Director 1.2

As most of you are aware by now, VMware announced the sunsetting of NSX for vSphere (NSX-V), and the current end of general support is targeted for January 2022, while the end of technical guidance will be in January 2023. It is important that Cloud Providers migrate from NSX-V to NSX-T as soon as possible. As…

-

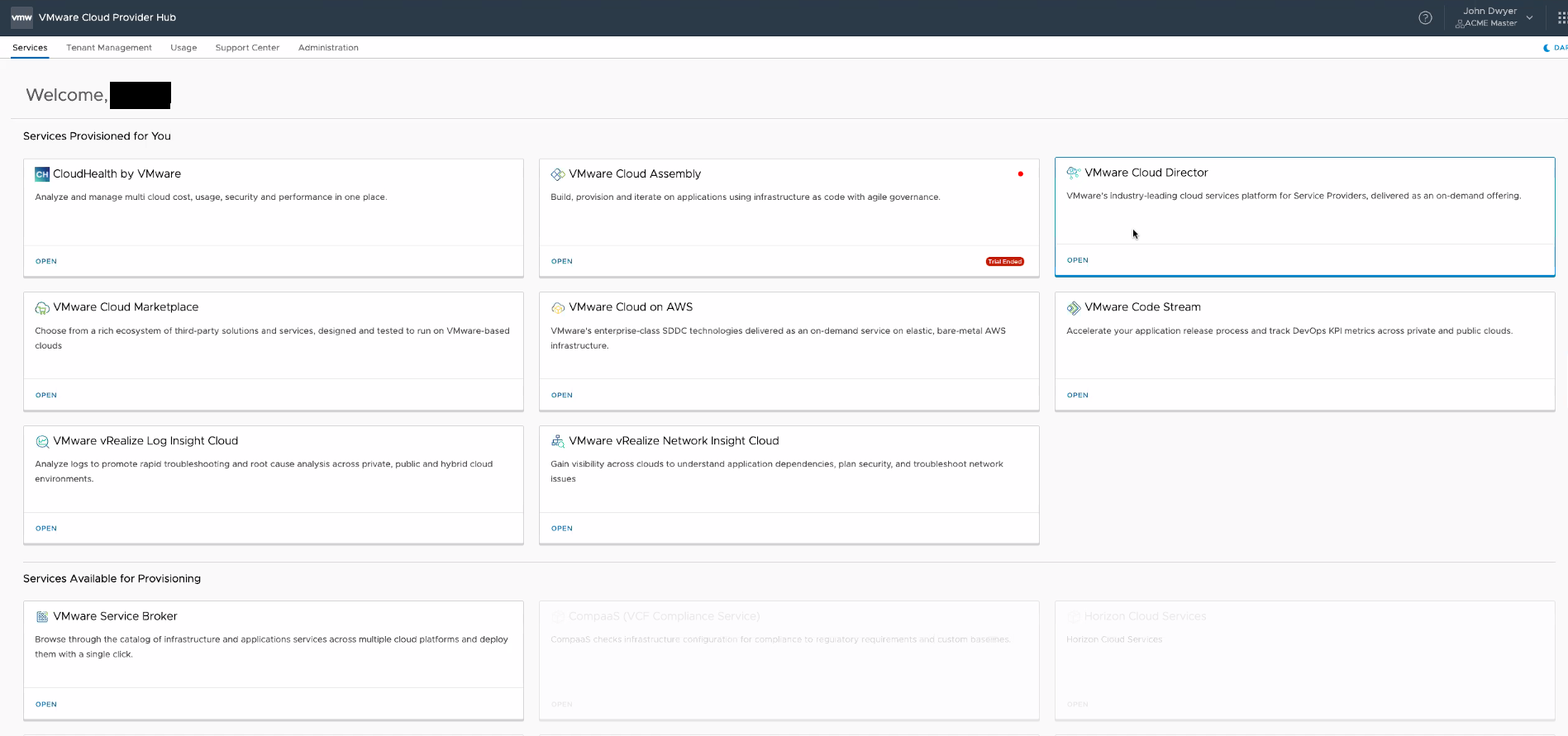

VMware Cloud Director Service Initial Availability

We have just announced Cloud Director Service Initial Availability, with VMC on AWS US West as the first region for now. There is a plan to expand this globally in the near future. Stay tuned for Cloud Director Service to become available in a VMC on AWS region near you! So what is…

-

VMware Cloud Flix Series for Cloud Providers

The More you Know, the Greater your Impact! The VMware Cloud Provider Team has created a series of 60-minute business and technical sessions that will enable you to capture business opportunities in a Multi-Cloud World. During these webinars, you will gain information and context to better understand the rapidly evolving Cloud Services market and how…

-

Cloud Director Kubernetes as a Service with CSE 2.6.x Demo

If you have been following our VMware Cloud Provider space for a while, you have probably been introduced to our Cloud Director Kubernetes as a Service offering based on VMware Container Service Extension. In the past, Container Service Extension was command-line only; a nice UI was introduced in CSE 2.6.0. Here…

-

VMware Container Service Extension 2.6.1 Installation step by step

One of the most requested features for previous versions of the VMware Container Service Extension (CSE) was a native UI. As of CSE 2.6, we have added one, which makes CSE friendlier and much more appealing to many of our…

-

Running vCD CLI fails with the following error: ModuleNotFoundError: No module named ‘_sqlite3’

After installing the VMware Container Service Extension, which installs the vCD CLI in the process, the vCD CLI kept failing to start and complaining that it could not find the sqlite3 module, as shown below. I was installing on CentOS 8.1, but even then it sounds like the sqlite version included with CentOS is out of date for what…

-

CSE 2.6.1 Error: Default template my_template with revision 0 not found. Unable to start CSE server.

While trying to run Container Service Extension 2.6.1 after a successful installation, I kept getting the following error: “Default template my_template with revision 0 not found. Unable to start CSE server.” To fix this you will need to: Edit your CSE config.yaml file to include the right name of…

-

Cloud Director App Launchpad Demo

Cloud Director App Launchpad enables Service Providers to offer a marketplace of applications within VMware Cloud Director. App Launchpad is a free plug-in for VMware Cloud Director that provides a user interface to easily access and launch applications from VMware Cloud Director content catalogs. Using App Launchpad, developers and DevOps engineers can launch applications to…

-

VMware Cloud Architecture Toolkit 5.0 is here!!

Thanks to the great efforts of my colleague Martin Hosken, the VMware Cloud Architecture Toolkit (known as vCAT) has been updated to version 5.0. vCAT has always been a great guide for our Cloud Providers on how best to architect and build their Cloud Provider stack. vCAT 5.0 is no exception and includes tons of valuable information for our Cloud…

-

VMware Cloud Director App Launchpad 1.0 Step by Step Installation

As VMware Cloud Director has been evolving and adding features to help cloud providers better serve their customers and target new markets, we are introducing Cloud Director App Launchpad, bundled as a free extension with Cloud Director 10.1. App Launchpad will allow the cloud provider to offer a curated catalog of applications…

-

The VMware Cloud Director appliance deployment fails when you enable the setting to expire the root password upon the first login

While trying to deploy vCloud Director 10.1 in my home lab, I ran into the errors below, and I wanted to share the resolution with others in case you are facing them and missed it in the release notes. “No nodes found in cluster, this likely means PostgreSQL is not…

-

How to Change VMware NSX-T Manager IP Address

You will often find yourself needing to change the IP addresses of your NSX-T Managers. For example, you might be changing your IP schema, as I am currently doing in my home lab. NSX-T does not have a field to change the IP address of its NSX Managers, but you will need to…

-

VMware Cloud Director 10.1 is here!

VMware Cloud Director 10.1 has just been released and is ready for you to try! As we have come to expect over the past few releases of vCloud Director, the number of features added in each new release is substantial, even in minor releases. VMware Cloud Director 10.1 is no different and comes with plenty of…

-

Google Cloud joins Azure and AWS in offering VMware Cloud

VMware has been working hard lately on executing its vision: “Build, Run, Manage, Connect and Protect Any App on Any Cloud on Any Device.” If you have spoken to any of my colleagues lately or attended VMworld or any other VMware event, I am sure you have heard it or a slightly modified version of…